The cost of free technology

Exploring the negative externalities of free software

Image Credit: Ben Garvin

As millions protest for the civil rights of Black Americans, I stand with you in support. We need to do better to end violent policing and a culture of systemic racism. I am not an expert on this topic; for leadership, read these incredible letters by Barack Obama and by Lee Pelton, President of Emerson College.

As a worker in tech, I’d like to discuss the hard tradeoffs that free technology imposes on society and on content moderation. It’s been a whirlwind of a week. Let’s recap:

Negative sentiment around violent policing and police brutality reaches an all-time high after the homicide of George Floyd.

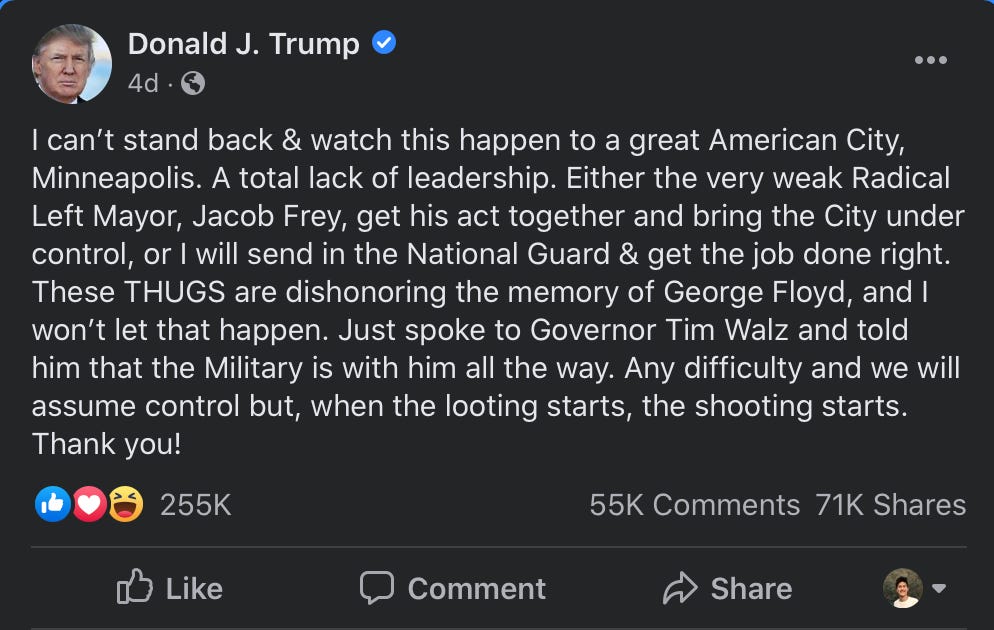

Trump posts a tweet and Facebook post hinting at the use of force and violence. “When the looting starts, the shooting starts.”

Twitter chooses to hide the tweet behind a disclaimer and disables likes, retweets, and replies on it.

Facebook chooses not to take action on the post, which goes on to receive 255K reactions, 55K comments, and 71K shares.

Hundreds of Facebook employees stage a virtual walkout in public protest of Facebook’s choice to let Trump’s inflammatory posts stand.

Historical precedents

"Those who do not learn from the past are doomed to repeat it."

Three incidents stand out to me in Facebook’s history of government leaders or hate groups using the platform to incite violence.

In 2017, Buddhist extremists used Facebook to inflame hatred and violence against the Rohingya minority in Myanmar at the cost of 25,000 deaths and 700,000 refugees fleeing the country. Facebook responded with better proactive detection of hate speech, hiring 100 Myanmar language experts, and removing ~600 Facebook entities misrepresenting state authorities.

In 2017, Mahmoud al-Werfalli, a special forces commander in the Libyan National Army (LNA), uploaded a video to Facebook showing him shooting three captured fighters dead. Facebook responded by removing the footage after an investigation.

In 2018, Sri Lankan officials blocked Facebook for three days after anti-Muslim sentiment shared on the platform erupted into riots, resulting in 3 deaths and 20 injuries. Facebook responded by reducing the distribution of frequently reshared messages, changing policies to protect vulnerable groups and fight voter suppression, increasing staffing, strengthening local fact-checking partnerships, and undergoing human rights impact assessments.

There have also been multiple accusations that Facebook has enabled incitement to terrorism on its platform (Facebook’s response).

Hard tradeoffs

Facebook has almost 3 billion monthly active users across Facebook, Instagram, and WhatsApp, covering ~40% of all humans alive today. Facebook is a global company, which means decisions need to be filtered through a global context, not a US-centric one.

Use of technology for good and evil

Technology companies have long held the Pollyannaish assumption that technology is a de facto force for good. There are great reasons to believe that. Technology creates value, replacing legacy processes with much more capable, efficient, and delightful user experiences. It’s not zero-sum.

Even though I fundamentally believe technology creates value, there is no guarantee that value will be used for good. To quote Ben Thompson (substituting Facebook for YouTube):

Facebook previously operated under the assumption that 1) Facebook has too much content to review it all and 2) The best way to moderate is to depend on its vast user base. It is a strategy that makes perfect sense with the pollyannish assumption that the Internet by default produces good outcomes with but random exceptions.

A far more realistic view — because again, the Internet is ultimately a reflection of humanity, full of both goodness and its opposite — would assume that of course there will be bad content on Facebook. Of course there will be extremist videos recruiting for terrorism, of course there will be child exploitation, of course there will be all manner of content deemed unacceptable by the vast majority of not just the United States but humanity generally.

Since 2016, Facebook has invested considerably in counteracting spam, misinformation, election manipulation, hate speech, child pornography, violence, identity theft, terrorism, and drug trafficking with artificial intelligence tools, content moderators, and coordinated efforts with national security experts.

I believe Facebook has the best intentions. There is inherent good in policing and catching bad behavior instead of letting it live in darkness on other platforms. Though Facebook can try to design its products to counteract bad behavior, as long as incentives exist for coordinated malicious behavior, there will always be people looking to use technology for evil. It is also impossible to pre-program the entire universe of possible negative behavior. Some will always slip through safeguards, and reducing friction gives access to good people and bad people alike.

The implication: the mere existence of freely available technology will result in some negative outcomes.

Free vs. paid products

Facebook is a non-rival technology product with near-zero marginal costs: it costs virtually $0 to serve an additional user, and one person’s use does not prevent anyone else’s (unlike food, where a meal eaten by one person can’t be eaten by another).

As a social network, Facebook also has powerful built-in network effects: the more people use the service, the more valuable it is to each of them. What’s the use of a messaging platform if there is no one to message? Facebook has strong incentives to bring as many people as possible onto the service to cement its competitive advantage over other companies.

But it comes with costs. Opening a fake account is free. Posting content that calls for violence is free. Creating terrorist groups, adding new members, and coordinating on the platform are all free. Coordinated inauthentic behavior would become much more expensive if each fake account cost $5 / month: a network of 10,000 fake accounts would cost $50,000 every month to keep running.

Meanwhile, every user’s attention is available to the highest bidder. Despite efforts to avoid discrimination, Facebook’s ad delivery system optimizes for advertisers’ return on spend, and that optimization can itself produce biased outcomes. From a research paper presented at CSCW 2019:

We find that skewed delivery can occur due to the content of the ad itself (i.e., the ad headline, text, and image, collectively called the ad creative). For example, ads targeting the same audience but that include a creative that would stereotypically be of the most interest to men (e.g., bodybuilding) can deliver to over 80% men, and those that include a creative that would stereotypically be of the most interest to women (e.g., cosmetics) can deliver to over 90% women. Similarly, ads referring to cultural content stereotypically of most interest to Black users (e.g., hip-hop) can deliver to over 85% Black users, and those referring to content stereotypically of interest to white users (e.g., country music) can deliver to over 80% white users, even when targeted identically by the advertiser. Thus, despite placing the same bid on the same audience, the advertiser’s ad delivery can be heavily skewed based on the ad creative alone.

Our society has shifted to a world after capital, where the only scarcity left is our attention, and we give that attention to Facebook every single day. Though I recognize that Facebook’s product and business model are well suited to being free, there is an inherent cost to free.

Arbiters of truth (censorship) vs. free expression

Zuckerberg has said Facebook won’t be an ‘arbiter of truth’. He has called for stronger internet regulation of harmful content, data protection, and political advertising. He has steadfastly stood for free expression over censorship.

Trump’s tweets on ‘looting and shooting’ are testing this principle at its core. As a left-leaning state, California has been notorious for its dislike of Trump. Silicon Valley has been repeatedly accused of biasing platforms against Republicans due to the liberal bias of its workforce. For people who dislike his policies, censoring Trump’s message seems like an easy answer.

However, if you extend this stance on censorship globally, you’ll see that an unelected group of engineers, product managers, and executives in Silicon Valley would be deciding what should and should not be censored for the entire world. If it sounds like I am disparaging myself and my entire profession, trust your instincts. When Facebook initially responded in Myanmar, it designated a Rohingya insurgent group, the Arakan Rohingya Salvation Army, as a dangerous organization while taking no action against the Tatmadaw, the Myanmar military responsible for the ethnic cleansing of the Rohingya.

We don’t get censorship right every time, and there is no way we possibly could.

Free expression gives us the power of dissent. It gives us the power and platform to debate ideas. It’s a core principle of American democracy. But it can be weaponized. Should Trump’s call for the use of state force count as a call for violence, especially when those same state forces have been filmed unjustly murdering Black Americans, and especially when the phrase ‘when the looting starts, the shooting starts’ was previously used by the white supremacist George Wallace?

Though Trump has been a wholly ineffective leader, I don’t think he is evil. Don’t attribute this to malice when it is adequately explained by stupidity. He is also still the President of the United States, one of the most powerful positions on Earth. You could argue Facebook should decide it has had enough, demote his messages, and perhaps even ban him, signaling that it wants nothing to do with Trump’s ideology and message, even though he could easily move to another platform.

Deciding truth also has a second cost: it confirms theories that liberal tech companies are biased against conservatives. That will inevitably lead to the drowning out of dissenting opinions. Dissent is uncomfortable by definition. Censorship is like taping over your opponent’s mouth during an argument. Blaming Facebook for not censoring is like blaming the debate moderator for not taping it shut for you.

What now?

Truth is desperately important to our society. It’s the foundation of progress. We can’t sacrifice the truth by supporting and amplifying the negative externalities of free expression. It’s too consequential, too valuable.

What we need is discourse: the ability to talk out our ideas, reason about the truth, and make difficult decisions and judgments about morally ambiguous questions. Most of all, we need to build empathy with one another. Polarization, black-and-white thinking, and ‘us vs. them’ politics stem from a lack of understanding of the other side. They come from a belief that there are only two worldviews instead of an infinite spectrum.

Our current products aren’t helping. Algorithmic feeds have bias. Selling our attention to the highest bidder is also selling away our beliefs. The structure of digital discourse is currently built to promote engagement, not truth. There is a huge opportunity to support a platform that helps us promote great ideas, stories, and solutions over divisive rhetoric.

Some potential ideas to enhance discourse:

Transform social networks into protocols, allowing entrepreneurs to build their own creative solutions on top of communication networks, much as they have on top of email.

Improve detection of coordinated inauthentic behavior and bots.

Help civic leaders hold structured discussions on important policies and content.

Move away from the posts model to a forum model with moderators, tags, polls, and live video discussions that is easily indexable and searchable.

Promote voices that amplify authenticity and rational expertise, and reduce amplification of highly negative rhetoric in news feeds.

Give people tools to get their messages in front of more diverse groups of people outside their core follower networks.

Prompt people who are about to post divisive content with a warning about negative rhetoric.

Give post authors and group leaders tools to moderate their groups and comments at scale.

I do believe technology can be designed to promote good, but I don’t have all of the answers, and I hope that discourse can bring out those answers we desperately need. Optimism in the face of adversity is America’s greatest superpower, and I hope we can make it out of this crisis more loving, more understanding, and more capable of supporting people unlike ourselves.
