Recruiting with robots

Will large language models replace recruiters?

In this piece, I analyze the capabilities of large language models for recruiting. What powers do they have? What does the trend line look like? Do recruiters need to start changing their careers? There are three sections which you can peruse at your leisure.

From theory — what are the strengths and weaknesses of AI?

From history — how has technology automated work in other industries?

From imagination — what would we build if we had a magic wand and no constraints?

In my next post, I’ll also explore from code. I’ll try to build what I’m proposing and see how far I get. Let’s get started!

From theory

What are the strengths and weaknesses of AI?

So far, we’ve seen large language models pass exams, analyze data, write code, generate stories, and even act as your girlfriend. More traditional forms of AI can underwrite loans, recognize objects in images, and organize the content of your newsfeed. None of these tasks is mission critical yet. We are only starting to trust self-driving cars, and even they operate within very strict boundaries and are met with a high degree of skepticism.

The hype around large language models does have merit. They unlock a new capability for entrepreneurs. We can now build bots which take orders and make judgements from natural language. The coding community likes to call these bots “agents.” I prefer “high schoolers on steroids.” These 15-year-old equivalents are available 24/7 to do whatever we command with reasonable intelligence. That includes tasks like summarization, categorization, translation, proofreading, formatting, content generation, and verification. But they still get facts and figures wrong. They need guidance and correction. They are 93% right, but we don’t know why the other 7% is wrong.

A big reason is how LLMs are trained. They use the whole corpus of internet documents as training data, and they use the patterns in that data to generate natural language responses to user questions. But they are not actually reasoning the way they appear to be. They do not know truth from lies, harm from harmlessness, equality from bias. People much smarter than I am are actively working on better, more robust language models.

This pattern matching falls short when AI tries to answer brand new questions. AI passes the bar exam, but under artificial constraints. If the same question is found in the training data, the AI has the answers on its cheat sheet. It pattern matches well enough that its answer is indistinguishable from a human’s. When AI tries to answer altered questions outside of the training data, it fails.

How does this apply to recruiting? A recruiter’s tasks can be broken down into a few key areas:

Sourcing — recruiters generate inbound applicants through in-store campaigns, job board posts, cold emails and LinkedIn messages, and referral programs

Screening — recruiters scan resumes and conduct interviews. They determine whether a candidate is a good fit before passing the candidate to later stages.

Selling — recruiters present offers to candidates and sell them on the company’s key benefits.

Admin — recruiters coordinate interviews, do data entry, supervise the recruiting process, and follow-up with candidates.

None of this is rocket science. A well-intentioned, capable high school student could learn to do each of these tasks. In theory, AI could automate them. But past attempts at using AI were criticized heavily because of their visible bias.

From Reuters:

Amazon’s AI penalized resumes that included the word “women’s,” as in “women’s chess club captain.” And it downgraded graduates of two all-women’s colleges, according to people familiar with the matter causing Amazon to shut down the program.

I think there’s still hope. From Avi Goldfarb, the author of “Power and Prediction”:

There are very good reasons to worry that compared to a perfect benchmark, that machine learning tools and AI discriminate, say in credit scoring and in hiring. But why do they discriminate? They discriminate typically because they’re mimicking human processes and humans discriminate. So the reason I’m optimistic that eventually we’ll move to AI on many of these processes isn’t because I think AI’s so great. It’s because humans are pretty awful. Because we’re so bad at these kinds of things, it’s going to be hard to ignore how good the machines are.

AI might actually be less biased than humans. Imagine that. AI receives criticism because it just happens to be more transparent. We can analyze it up, left, and sideways. It leaves an audit trail. It’s an algorithm which can be graded, judged, and fixed. But visible doesn’t mean worse. It is much harder to change human behavior than to fix an algorithm.
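To make "graded, judged, and fixed" concrete, here is a minimal sketch of one way an audit trail could be graded. It compares how often a screening step advances candidates from each group and flags any group whose rate falls below four-fifths of the highest rate, a common rule of thumb for adverse impact. The function names, group labels, and audit log are all hypothetical and invented for illustration; this is not how any particular vendor or employer audits its tools.

```python
from collections import Counter


def selection_rates(decisions):
    """Return the share of candidates advanced, per group.

    `decisions` is an iterable of (group, advanced) pairs, where
    `advanced` is True if the screen passed the candidate along.
    """
    totals = Counter()
    advanced = Counter()
    for group, was_advanced in decisions:
        totals[group] += 1
        if was_advanced:
            advanced[group] += 1
    return {group: advanced[group] / totals[group] for group in totals}


def adverse_impact_ratios(rates):
    """Divide each group's selection rate by the highest group's rate."""
    best = max(rates.values())
    if best == 0:
        # Nobody was advanced, so there is no gap between groups to measure.
        return {group: 1.0 for group in rates}
    return {group: rate / best for group, rate in rates.items()}


# Hypothetical audit log: made-up groups and outcomes, for illustration only.
audit_log = (
    [("group_a", True)] * 40 + [("group_a", False)] * 60
    + [("group_b", True)] * 24 + [("group_b", False)] * 76
)

rates = selection_rates(audit_log)
ratios = adverse_impact_ratios(rates)

# Four-fifths rule of thumb: flag groups selected at under 80% of the top rate.
flagged = sorted(group for group, ratio in ratios.items() if ratio < 0.8)
print(rates, ratios, flagged)
```

The same check could in principle be run on a human recruiter's decisions, but only if those decisions are logged as consistently as an algorithm's, which is the point about transparency.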

Theory suggests an AI recruiter is possible. Let’s see how history has proven this out.

From history

How has technology automated work in other industries?

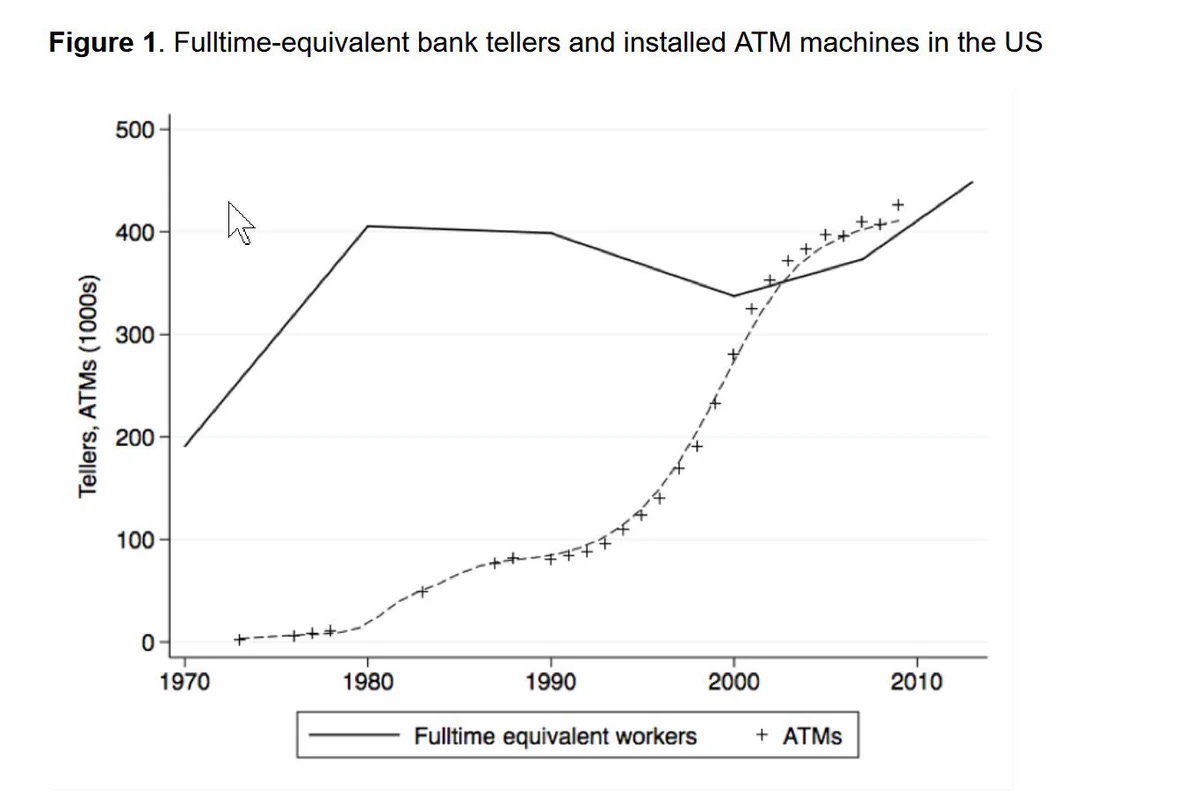

This chart continues to surprise me. Just one of the 270 jobs in the 1950 census has been eliminated by automation... elevator operator. Technology does not remove jobs — rather it changes the job description. In my post on “The Meaning of Work” I talk about how better technology creates higher expectations and work expands to fill the available time. When the original work is automated away, it drives productivity up and costs down. It increases demand for the good produced and increases the number of workers needed.
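The arithmetic behind that last step is worth spelling out, because it only works when demand responds strongly enough to lower prices. Below is a toy model, not anything taken from the chart: it assumes prices fall in proportion to the productivity gain and that demand follows a constant elasticity. The function name and all of the numbers are made up for illustration.

```python
def workers_needed(baseline_workers, productivity_gain, demand_elasticity):
    """Workers needed after automation, under a toy constant-elasticity model.

    Assumes (hypothetically) that unit price falls in proportion to the
    productivity gain, so quantity demanded scales by gain ** elasticity
    while output per worker scales by gain.
    """
    demand_multiplier = productivity_gain ** demand_elasticity
    return baseline_workers * demand_multiplier / productivity_gain


# 100 workers today, automation doubles output per worker.
for elasticity in (0.5, 1.0, 1.5):
    print(elasticity, round(workers_needed(100, 2.0, elasticity), 1))
```

In this sketch, headcount grows only when the elasticity is above 1, meaning demand rises faster than prices fall. At exactly 1 the workforce stays flat, and below 1 it shrinks.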

AI doomsayers parade around media outlets to shout at the sky. “Super-intelligence is coming! Skynet is going to take over the WORLD. We need to start regulating this and building committees!” But it turns out, artificial intelligence has existed since the 1950s. Even back then, AI could play checkers, chat with you, solve math problems, and make decisions. Every time a new kind of intelligence emerges, we change the parameters of what it means to be intelligent. We adapt and a new status quo emerges.

Arthur C. Clarke, a science fiction writer, is commonly cited for saying, “any sufficiently advanced technology is indistinguishable from magic.” Consider all the technologies in our lives that were once considered magic: electricity, digital money, music and video streaming, global video calling, telehealth, on-demand taxi service, global turn-by-turn directions, and voice assistants. The Amazon Go store doesn’t even need a checkout anymore.

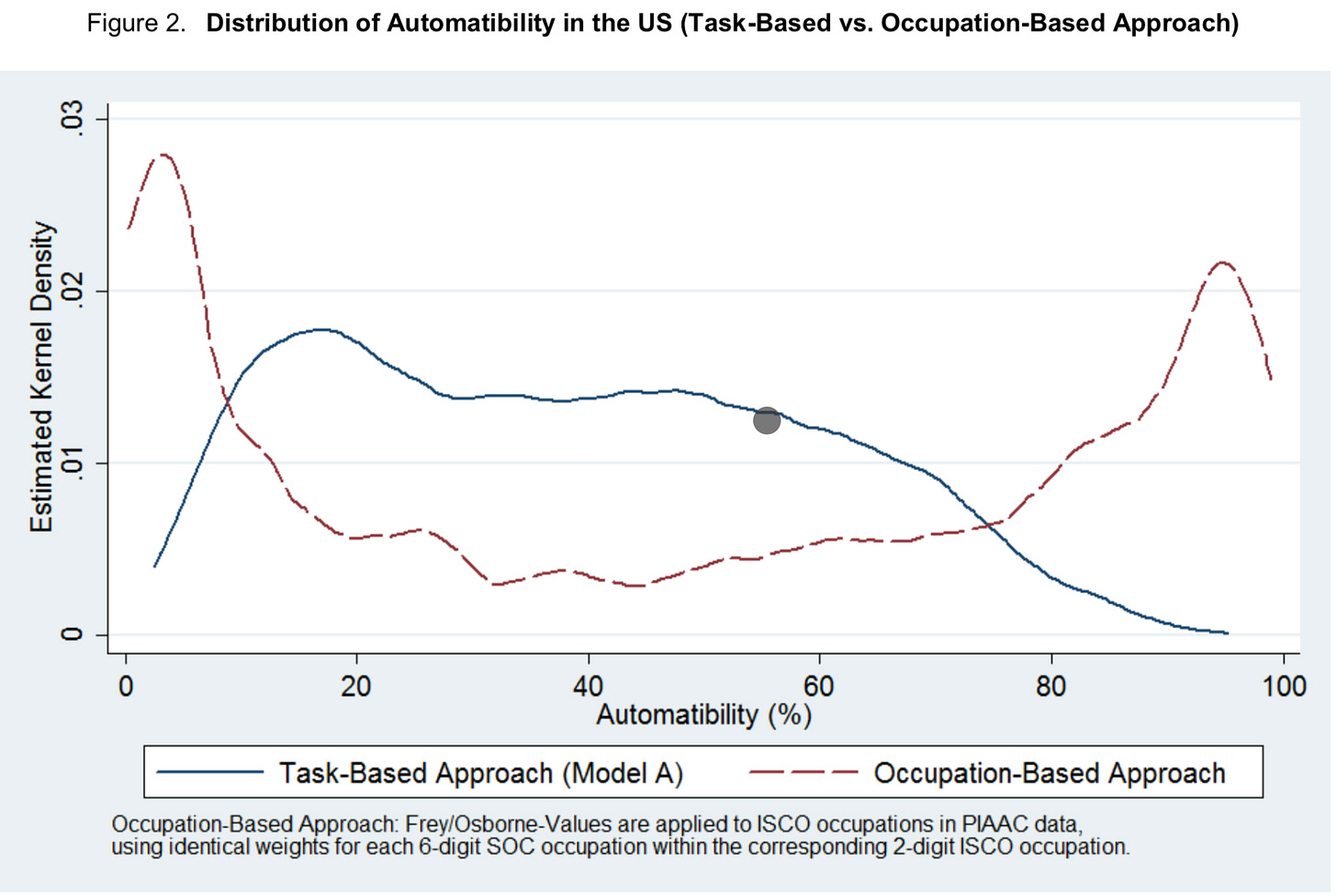

Despite these advances in automation, the jobs they were meant to automate didn’t go away. In fact, they lowered the cost to serve, which increased demand. Think about how many people now book a taxi compared to the yellow cab era. And a closer look at automating tasks, rather than whole occupations, paints a very different picture. The chart below outlines the percentage of work that can be automated away when considering tasks vs. occupations.

Computers excel at automating routine cases, but falter at automating special cases. Despite the installation of many self-checkout kiosks, the number of cashiers in a store has actually increased. Self-driving will likely have the same effect on trucking. Truckers may still need to be employed to sit in a self-driving vehicle in case errors occur. Who is going to rescue the vehicle if it stops in the middle of nowhere?

Cases in other industries are no different. AI transcription is very accurate and very cheap. This would suggest that the employability of medical scribes is in decline. But I would bet that new transcription technology has actually increased transcription overall. With cheaper and greater access comes more usage. Many private practices likely could not afford a scribe. They asked their doctors, coordinators, and nurse practitioners to do all the transcription by hand or forgo it altogether.

History shows that automation increases productivity, but has a marginal impact on employment. Instead, it changes the role and the type of work. Let’s imagine what the future might look like if AI is already embedded within recruiting.

From imagination

What would we build if we had a magic wand and no constraints?

I explore many of these ideas in my post on “Creating a new jobs marketplace.” Here, I want to specifically focus on using AI. For both job seekers and recruiters, what are the admin tasks that are the most ripe for automation?

Imagine using AI for:

Job searching — let AI help select opportunities job seekers want.

Sourcing — let AI decide where to spend and how to allocate money to source applicants and candidates.

Resumes — let AI generate the resumes for candidates, and grade the resumes for recruiters.

Interviews — let AI transcribe the interviews and generate takeaways.

Assessments — let AI grade freeform candidate answers for correctness

References — let AI transcribe and analyze reference calls to find underlying patterns

Compensation — let AI decide candidate pay

Offer decision — let AI decide whether to give an offer to a candidate

I imagine the last two, compensation and offer decision, are giving you shivers. Is it right to trust an AI to actually determine those? Should we have a human in the middle to decide first? AI can likely make suggestions, but won’t be relied on to be the final decision maker. That trust will need to be earned. And we will have to be quite careful about how this context is presented to decision makers.
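The "AI suggests, human decides" arrangement can be sketched in a few lines. This is a hypothetical design, with invented class and field names, rather than a description of any real recruiting product. The AI's output is stored as a suggestion, a named human reviewer makes the call, and the record notes whether the reviewer followed the suggestion so that overrides can be studied later.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class OfferSuggestion:
    """An AI-generated suggestion that is not binding on its own."""
    candidate: str
    recommend_offer: bool
    suggested_salary: int
    rationale: str


@dataclass
class OfferDecision:
    """The final, human-owned decision, kept alongside the audit flag."""
    candidate: str
    extend_offer: bool
    salary: Optional[int]
    decided_by: str
    followed_suggestion: bool


def decide(suggestion, reviewer, extend_offer, salary=None):
    """Record the human reviewer's decision next to the AI suggestion.

    The reviewer's choice always wins; the suggestion is kept only so
    agreement and overrides can be audited later.
    """
    if not reviewer:
        raise ValueError("a named human reviewer is required")
    final_salary = None
    if extend_offer:
        # Fall back to the suggested salary only if the reviewer gave none.
        final_salary = salary if salary is not None else suggestion.suggested_salary
    followed = (
        extend_offer == suggestion.recommend_offer
        and (not extend_offer or final_salary == suggestion.suggested_salary)
    )
    return OfferDecision(
        suggestion.candidate, extend_offer, final_salary, reviewer, followed
    )


# Hypothetical example: the reviewer agrees to an offer but changes the pay.
suggestion = OfferSuggestion("candidate-001", True, 95_000, "strong assessment scores")
decision = decide(suggestion, reviewer="hiring-manager", extend_offer=True, salary=102_000)
print(decision)
```

Tracking `followed_suggestion` matters because it shows whether reviewers are exercising judgment or simply rubber-stamping whatever the model proposes.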

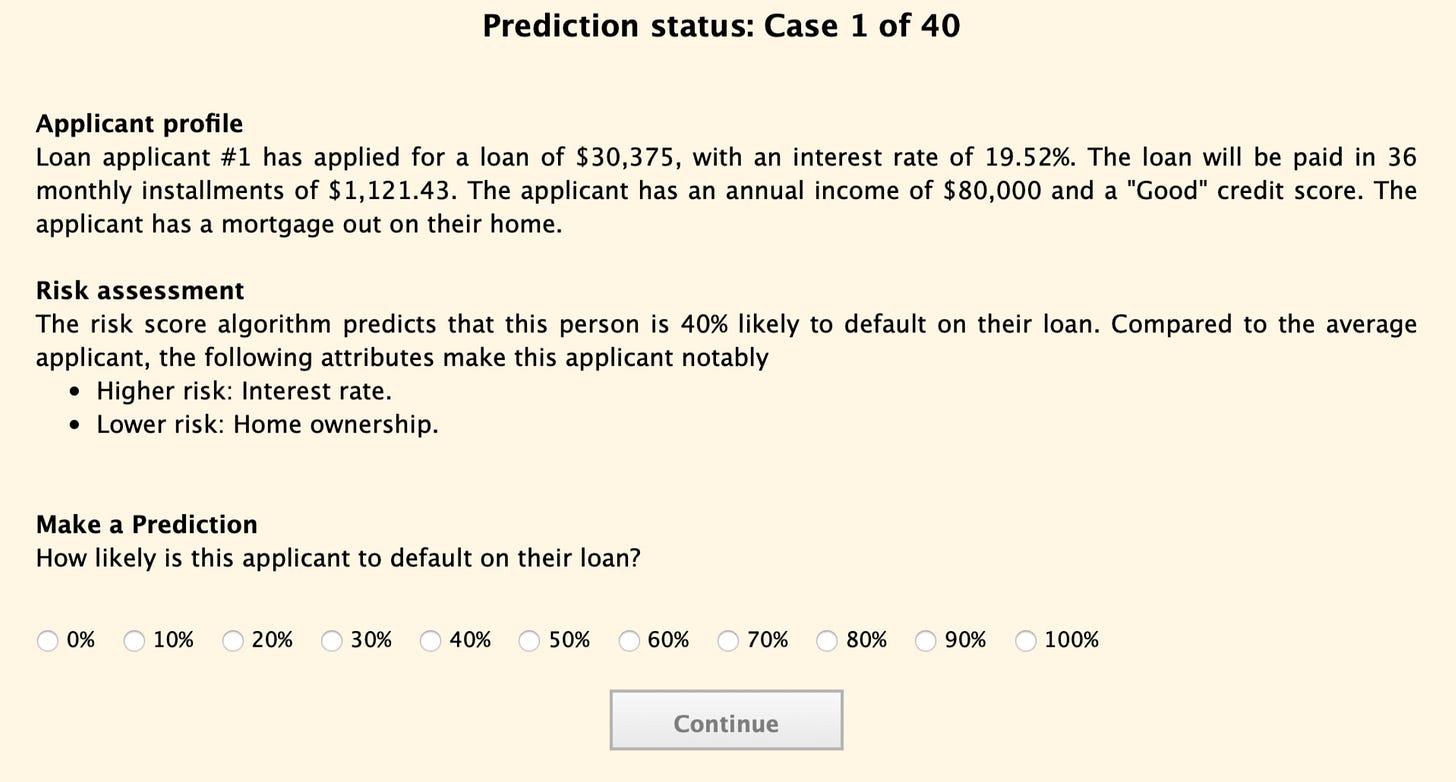

In 2019, Harvard researchers Ben Green and Yiling Chen presented a paper on “The Principles and Limits of Algorithm-in-the-Loop Decision Making.” Today, companies use algorithms to model risk, determine credit scores, and predict human behavior. The authors asked participants to make a similar prediction: how likely was a loan applicant to default, based on a narrative profile along with a computer-generated risk score?

The results found that participants 1) were unable to effectively evaluate the accuracy of their own or the risk assessment's predictions, 2) did not calibrate their reliance on the risk assessment based on the risk assessment's performance, and 3) exhibited bias in their interactions with the risk assessment. There is still tremendous power in designing interfaces correctly. Good interfaces teach people how to correctly evaluate their decision making and use data in the right way.

Takeaways

AI is going to automate many tasks away from recruiters. In many instances, it will do them even better than recruiters. It is available 24/7, can spend unlimited time on a task, and replies with answers instantly. However, partial automation is not the same as full automation. Tools will be built to supercharge recruiters’ productivity, rather than replace them outright. Before AI is used for hiring, trust will need to be built and earned through careful design.

In my next post, I’m going to try to build AI recruiting tools and see how far I can get!

If you enjoyed this post, please share it with others and subscribe!